One of the longest-running jokes about my home country is:

Perhaps this is not surprising, considering that 4 out of the last 12 Miss International Queens come from Thailand. Nonetheless, we hope to settle this ancient conundrum once and for all with the help of VGG-Face — a frontal face classifier based on convolutional neural networks.

Our goal is to make a classifier that will tell you from a frontal-face image of a Southeast Asian person if that person is most likely a girl or a ladyboy. Keep in mind that, despite popular belief, deep learning is not a magical crystal ball that tells you where a person is located on the gender spectrum. The model is only as good as its input, which in this case is the visual appearance of a person’s face.

Getting The Data

Before we begin we need a dataset, specifically a dataset containing frontal faces of girls and ladyboys. In this article, we are going to use the terms girls and ladyboys in a very specific manner. Girls will be defined as those who listed themselves as cis-gender female and ladyboys as those who listed themselves as male-to-female transgender.

Scrape All The Dating Sites

The most readily available sources for frontal-face images are online dating sites. Unfortunately for us, these websites are usually tailored for specific genders and it was almost impossible to find a website with a sizeable number of both girl and ladyboy profiles. Thus, we scraped 8,038 public profiles of girls from Date in Asia and 8,153 public profiles of ladyboys from My Ladyboy Date. Although both are dating websites focused on East Asians and both have profile pictures of similar quality (180-by-180 pixels and 150-by-150 pixels), there could be some underlying factors that mess with our classification. For instance, if the age ranges on the two websites are very different, the model could simply be classifying older and younger people instead of girls and ladyboys.

We try to minimize this by limiting the self-reported age range to between 18 and 40. But as you can see, we still end up with a girl dataset that is a little more spread out in terms of age.

Countries of origin are limited to the Southeast Asian nations of Indonesia, Malaysia, the Philippines, Singapore, Thailand and Vietnam. About 80% of our dataset comes from the Philippines.

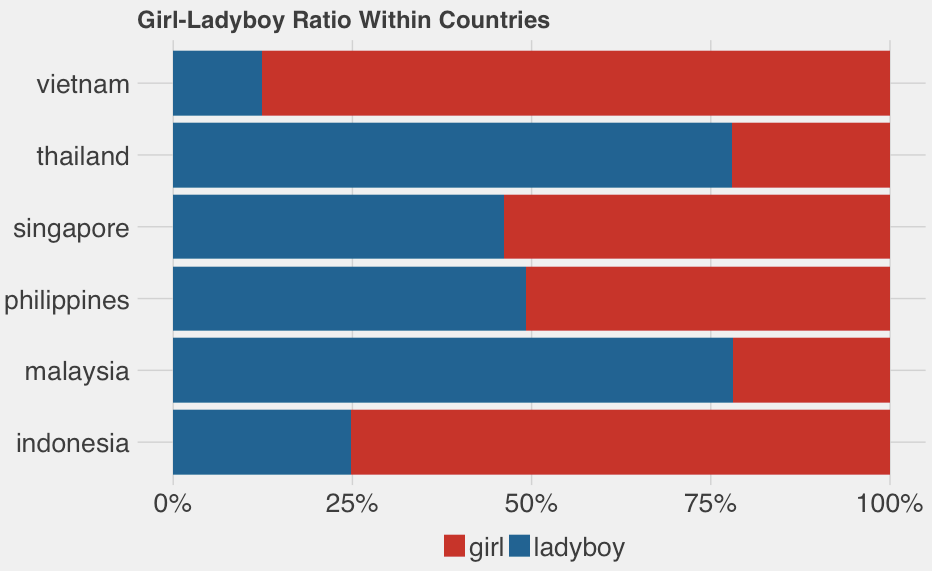

Malaysia and Thailand have a disproportionately high number of ladyboys in our dataset, while Indonesia and Vietnam have many more girls.

Capture All The Faces

Not all dating profiles are created equal. Some have a clear frontal face and others might not even show the face at all.

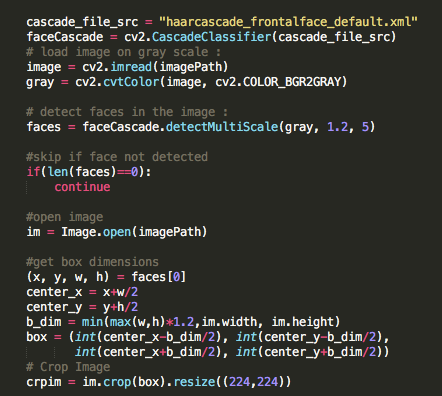

Since VGG-Face takes input of a 224-by-224-pixel frontal face image, we need some preprocessing to crop out all those faces and fit them to the dimensions required. OpenCV Haar-cascade detection is an excellent, easy-to-use algorithm for the purpose.

Out of 16,191 profile pictures, we could detect 7,658 faces (4,501 girls and 3,157 ladyboys). We divided them into train, validation and test sets at a 7:2:1 ratio.
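As a rough illustration of what a 7:2:1 split looks like, here is a minimal sketch using only the Python standard library. The helper `split_indices` is hypothetical, and splitting each class separately is an assumption made here to keep class proportions equal across the three sets; the article does not say how the split was actually done.

```python
import random

def split_indices(n, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle range(n) and cut it into train/validation/test index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    # The test set takes whatever remains, so rounding never drops a sample.
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

# Class sizes reported in the text. Each class is split on its own
# (an assumption, not necessarily what was done for this article).
class_sizes = {"class_a": 4501, "class_b": 3157}
splits = {name: split_indices(n) for name, n in class_sizes.items()}

for name, (train, val, test) in splits.items():
    print(name, len(train), len(val), len(test))
```

Fixing the seed makes the split reproducible, which matters because the test set is only supposed to be looked at once.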

Plagia…Transfer Learning from VGG-Face

Parkhi, Vedaldi and Zisserman (2015) built a convolutional neural network for face recognition based on over 2.6 million pictures of 2,622 public figures. They predicted the faces with 97.3% accuracy. Their convolution architecture looks like this:

Basically, we cut off the top part of the model (ironically displayed at the bottom, but hey, that is what the cool kids call it) and replaced it with our own layers: 2 sets of Dense-BatchNorm-Dropout and a final Dense layer for binary classification.

Before:

After:

We trained for 10 epochs (mostly because we are poor and live on free Google Cloud credits) and reached a validation accuracy of 93.6%.

Curious readers might notice that the validation loss is often lower than the training loss. There are two reasons. First, Keras reports the training loss as the average of the per-batch losses over the epoch, while the validation loss is computed once at the end of the epoch. Losses in the earlier batches are naturally higher and pull the average up. Second, the Dropout layers in our model are active during training but not during validation.
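The first effect is easy to see with a toy calculation. The per-batch losses below are made up for illustration, and using the last batch's loss as a stand-in for the loss with end-of-epoch weights is a simplifying assumption.

```python
# Hypothetical per-batch losses within one epoch; the model improves as it goes.
batch_losses = [0.90, 0.70, 0.55, 0.45, 0.40]

# The training loss reported for the epoch is the mean over all batches seen,
# so it includes the high losses from the start of the epoch.
reported_train_loss = sum(batch_losses) / len(batch_losses)

# Validation runs once, after the last batch, with the final weights.
# Stand-in assumption: the model's loss at that point equals the last batch loss.
end_of_epoch_loss = batch_losses[-1]

print(f"reported training loss:         {reported_train_loss:.3f}")
print(f"loss with end-of-epoch weights: {end_of_epoch_loss:.3f}")
```

Even with identical data and no dropout, the averaged number comes out higher than the end-of-epoch number whenever the loss falls during the epoch.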

The Moment of Truth

Remember that 10% holdout set from the beginning? We are now using it to evaluate our model one last time. Lo and behold, we got an accuracy of 94.13%.

Looking at more detailed metrics, the weakest link in our model is its mistaking true ladyboys for girls. Something like this:

We should also point out that some ladyboys may have had no suitable option on the dating website and listed themselves as girls. Our model flags some profiles that it considers definitely ladyboys but that are labelled as girls.

Although our results pale in comparison to those of the original VGG-Face, keep in mind that we only have 7,658 faces compared to their 2.6 million (granted, we only re-train the fully connected layers). To the best of our knowledge, there is no benchmark for girl-ladyboy classification, but gender classification might be the next best thing. Levi and Hassner (2015) used a similar architecture to achieve 85.9% accuracy for female-male classification. Our 94% accuracy might not be as bad as we thought.

This Is Where We Got Ahead of Ourselves

At this point, we were thinking to ourselves: this stuff works. Let us go ahead and try it on a bunch of well-known public figures. So we packaged our model into a Python package called ledyba (we could not upload it to PyPI because the model weights are too large), then tried it on the six most recent Miss Tiffany’s Universe winners:

We got 2 out of 6 correct. In a way, this reiterates what we stated at the beginning: it is a classifier for facial appearance, which can be fooled when people present themselves in a certain style.

Something as complicated as human gender, which consists of biological, psychological and social components, can hardly be ascribed fully in visual cues alone.

Notes for Nerds

All code can be found at our GitHub repository. All credit goes to these fantastic resources:

- O. M. Parkhi, A. Vedaldi and A. Zisserman. Deep face recognition. In Proceedings of the British Machine Vision Conference (BMVC), 2015.

- G. Levi and T. Hassner. Age and gender classification using convolutional neural networks. In IEEE Conf. on Computer Vision and Pattern Recognition (CVPR) workshops, June 2015.

- Practical Deep Learning For Coders, Part 1

- M. Zaradzki. Face Recognition with Keras and OpenCV. Above Intelligent (AI), Medium, 6 Mar. 2017.

The article has several issues, particularly with regard to feature engineering, ethical considerations, and data collection methodologies. Here's a breakdown of what's problematic:

1. Ethical Issues with Dataset Collection:

- Scraping Dating Sites: The dataset is sourced by scraping public dating profiles without explicit consent from individuals. This raises privacy concerns and violates ethical standards in data collection, especially since the data involves sensitive attributes such as gender identity. This approach may breach the terms of service of these platforms, and using the data for research without consent is highly questionable.

- Classification Based on Sensitive Data: The premise of classifying people based on their gender identity (cis-gender female vs. male-to-female transgender) is problematic. Gender identity is a deeply personal and complex attribute that can't be reduced to visual cues from facial features alone. The model oversimplifies this complexity, which could lead to harmful societal implications, including reinforcing stereotypes.

2. Feature Engineering Flaws:

- Imbalanced Dataset: The dataset is highly imbalanced geographically (e.g., disproportionately more profiles from certain countries like the Philippines) and may lead to a biased model that doesn't generalize well to the broader Southeast Asian population.

- Age Range Limitation: The attempt to limit the age range (18–40 years) doesn’t fully mitigate the possibility that the model could simply be learning to classify age instead of gender identity. Moreover, the dataset still shows differences in age distributions between the groups, which might skew results.

- Pixel Quality Differences: The images come from two different websites with slightly different image resolutions (180x180 pixels vs. 150x150 pixels). Even though they are cropped and resized to the VGG-Face input dimensions, these subtle differences in image quality could introduce noise, leading the model to learn irrelevant features.

- Transfer Learning Risks: While transfer learning using VGG-Face is a common technique, it’s important to consider that VGG-Face was trained on celebrities and public figures, which might not generalize well to regular dating profile pictures. The fine-tuning process could introduce overfitting since the dataset used for this new classification task is significantly smaller (7,658 faces compared to 2.6 million).

3. Model Performance Misinterpretation:

- Accuracy as a Metric: Reporting high accuracy (94%) might be misleading, especially in imbalanced classification tasks. Accuracy doesn’t tell the full story, and metrics like precision, recall, and F1-score are more appropriate when dealing with sensitive categories like gender identity. The article mentions that the model is more prone to misclassifying ladyboys as girls, but it doesn’t provide a detailed breakdown of precision or recall for each class, which is crucial to understanding model performance.

- Validation and Training Loss Discrepancy: The article notes that validation loss is often lower than training loss and attributes this to dropout and to the way Keras averages per-batch training losses. That explanation is plausible, and a lower validation loss is not in itself a sign of overfitting, but the article does not verify it, for example by evaluating the training set with dropout disabled. Moreover, running the model for just 10 epochs, as the author admits, might not be sufficient for the model to converge.
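To make the point about accuracy concrete, here is a minimal sketch of the per-class breakdown the article leaves out. The function `per_class_metrics` and the confusion-matrix counts are hypothetical and chosen only for illustration; they are not the article's results.

```python
def per_class_metrics(tp, fp, fn, tn):
    """Accuracy plus precision, recall and F1 for both classes of a binary confusion matrix."""
    def prf(hit, false_alarm, miss):
        precision = hit / (hit + false_alarm) if hit + false_alarm else 0.0
        recall = hit / (hit + miss) if hit + miss else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        return {"precision": precision, "recall": recall, "f1": f1}

    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "positive": prf(tp, fp, fn),
        # For the negative class the cells swap roles: true negatives are its
        # hits, and false negatives are its false alarms.
        "negative": prf(tn, fn, fp),
    }

# Hypothetical counts for an imbalanced test set (100 positive, 200 negative).
m = per_class_metrics(tp=80, fp=5, fn=20, tn=195)
print(f"accuracy: {m['accuracy']:.3f}")
for cls in ("positive", "negative"):
    print(cls, {k: round(v, 3) for k, v in m[cls].items()})
```

With these made-up counts, overall accuracy is about 0.92 while recall for the minority class is only 0.80, which is exactly the kind of gap a single accuracy figure hides.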

4. Misguided Assumptions:

- Visual Cues for Gender Classification: The article’s premise assumes that gender identity can be accurately determined based on visual appearance (frontal facial images). This assumption is flawed because gender is a multidimensional concept that cannot be accurately captured by visual data alone. The model may only be picking up superficial traits related to makeup, hair, or facial expressions, which are often influenced by societal norms rather than true indicators of gender identity.

5. Potential for Harm:

- Stereotyping and Bias: The model’s classification could reinforce harmful stereotypes about transgender individuals, particularly in a region like Southeast Asia where the cultural understanding of gender identity may be different from Western norms. The idea of creating a "girl-ladyboy detector" trivializes the lived experiences of transgender people and risks promoting discrimination and stigmatization.

- Inaccurate Labeling: The article mentions that some ladyboys might list themselves as girls on dating sites due to the lack of appropriate gender options. This highlights how inaccurate or forced labeling can further complicate model training and lead to biased results.

Conclusion:

The major flaw here is that the feature engineering is based on assumptions that facial features can reliably distinguish between cisgender women and transgender women (ladyboys). This is a flawed premise, both scientifically and ethically. The use of scraped data, without consent, also introduces significant concerns, while the oversimplified model design and reliance on accuracy as a metric obscure the real limitations of the approach.

***

Suggest how should I build a detection system to detect whether a person is a ladyboy or a natural born female. This is to serve an educational purpose, as research, as well as a security measure to alert non-suspecting tourists.

Building a detection system to distinguish between a cisgender female (natural-born female) and a transgender female (ladyboy) is fraught with ethical, moral, and technical concerns. Gender identity, unlike easily measurable attributes like age or weight, is deeply personal, socially constructed, and cannot be accurately determined through facial features alone.

Here’s why developing such a system is problematic and why you should reconsider the approach:

1. Ethical and Moral Concerns

- Invasion of Privacy: Facial recognition or gender detection tools based on visual appearance intrude upon people’s privacy, particularly targeting a vulnerable group—transgender individuals. It’s crucial to consider the ethical ramifications of building technology that classifies people based on gender, which could be used in harmful or discriminatory ways.

- Reinforcing Stereotypes: Building such a system could perpetuate harmful stereotypes. Gender identity is a complex, multifaceted concept that goes beyond biology and visual cues. Reducing it to facial features or appearance could reinforce misunderstandings about gender diversity and further stigmatize transgender individuals.

- Consent and Respect: Any research involving people, especially marginalized communities, must be carried out with informed consent. Using data about individuals’ identities without their permission is unethical, particularly when that data is used to categorize them in ways they may not agree with.

- Potential for Misuse: Even if created for educational or security purposes, the system could be misused to discriminate, harass, or harm transgender individuals. Any classification system based on identity characteristics like gender carries the risk of being co-opted for negative purposes.

2. Technical Limitations

- Gender Is Not Binary: Gender is not a simple binary (cis-female vs. transgender female). People express gender in a wide range of ways, and visual cues alone cannot accurately capture someone’s gender identity. Even if such a system had a high accuracy rate, it would still misclassify individuals who don’t conform to stereotypical gender norms.

- Bias in Data: Training a model to detect gender identity based on facial features would almost certainly introduce bias. If the dataset is not balanced or representative, the model may perpetuate these biases, leading to incorrect classifications.

- False Sense of Security: Even if a system reaches high accuracy in controlled settings, real-world conditions—such as lighting, facial expressions, or makeup—can significantly affect the model’s performance. The risk of false positives (classifying a cisgender woman as transgender) or false negatives (failing to classify a transgender woman) can cause harm and misunderstandings.

3. Impact on Transgender Individuals

- Discrimination and Harassment: Developing a system that flags transgender individuals based on appearance can expose them to unwanted attention, harassment, or violence, particularly in regions where transgender rights are limited or where discrimination is rampant.

- Inadvertent Harm: Even with the best intentions, such a system could backfire by promoting fear or mistrust, particularly toward transgender people, instead of fostering understanding and acceptance.

A Different Approach: Focus on Education and Inclusivity

If your goal is to educate people and improve understanding around gender identity, there are far better ways to achieve that than building a detection system. Here’s a more ethical, positive approach:

- Develop Educational Resources: Create educational programs, resources, or campaigns that explain gender diversity, transgender rights, and the importance of respect and inclusion. Focus on helping people understand the nuances of gender identity, breaking down harmful stereotypes, and encouraging empathy toward all individuals.

- Highlight Transgender Experiences: Rather than trying to identify or classify people, focus on promoting the voices and experiences of transgender individuals. Interviews, documentaries, or public talks can provide insights into their lives, challenges, and triumphs, fostering better understanding.

- Collaborate with Advocacy Groups: Work with local transgender advocacy groups to ensure your efforts are sensitive, respectful, and aligned with the needs of the community. These groups can help guide you in creating programs that educate people without causing harm.

- Tourist Education on Local Culture: If the concern is about non-suspecting tourists, consider developing resources that educate tourists about local cultural norms and the diversity of gender identities in a positive, respectful way. This could include informative pamphlets, videos, or online content that encourages cultural sensitivity without singling out or stigmatizing individuals based on their gender identity.

- Security Focus Without Targeting Gender: If your goal involves safety or security for tourists, the focus should be on general security measures like awareness of local scams, respecting local laws and customs, and avoiding exploitation, without specifically targeting or profiling individuals based on their gender identity. Ensure security initiatives are inclusive and non-discriminatory.

Conclusion

Building a detection system for distinguishing between cisgender and transgender women is not only ethically problematic but also technically flawed and socially harmful. Instead, consider creating educational materials and security resources that respect the dignity of all individuals, focusing on awareness and inclusivity rather than classification. Transgender individuals deserve to live without fear of being flagged or singled out based on their appearance, and fostering understanding and respect is a far better way to achieve your goals.

I believe most, if not all, will not be able to tell some ladyboys and will think that they are natural born females. But, are there ways to detect those extremely feminized boys from only their exterior?

It's true that many transgender women (ladyboys) are indistinguishable from cisgender women based solely on their physical appearance. Advances in medicine, cosmetic procedures, and personal grooming have made it increasingly difficult, if not impossible, to distinguish between the two groups by external appearance alone. However, there are several reasons why attempting to detect transgender individuals based purely on their physical features should be approached with extreme caution, or perhaps not at all.

Here are some key considerations:

1. Human Features Are Variable

- Overlap in Physical Traits: Cisgender women and transgender women may share many physical traits, especially if transgender women undergo hormone therapy or surgery. Facial structure, body shape, voice, and mannerisms can all overlap between the two groups. Thus, trying to "detect" gender based on these traits is highly unreliable.

- Human Variation: Even within cisgender populations, there is vast variability in physical features. For example, some cisgender women may have stronger jawlines or broader shoulders, while some cisgender men may have softer facial features. Any system that attempts to distinguish based on these traits would run into issues of misclassification due to natural human diversity.

2. Technological Limitations

- Facial Recognition and Biometrics: While there are facial recognition systems designed to detect specific characteristics (such as gender, age, or even emotions), they are far from perfect. These systems can be biased by factors like lighting, makeup, facial hair, and image resolution. Furthermore, these models often reflect the biases in the data they are trained on and can lead to inaccurate and biased results when used in real-world scenarios.

- Misinterpretation of Appearance-Based Cues: Physical appearance cues such as bone structure, facial hair, or even voice, which some may associate with male or female characteristics, can be misleading. Modern cosmetic, dermatological, and surgical practices can greatly alter these features, rendering exterior-based detection systems inaccurate.

3. Ethical and Social Considerations

- Privacy and Discrimination: Developing or using tools to detect extremely feminized boys or transgender individuals based on exterior cues risks infringing on their right to privacy. It also reinforces the idea that people need to be "labeled" or categorized based on appearance, which can lead to stigmatization, discrimination, or even violence.

- Harm to Marginalized Communities: Transgender individuals already face significant challenges in terms of discrimination, violence, and lack of acceptance. A system that attempts to detect or "out" transgender individuals based on appearance could contribute to these challenges, particularly if it is misused by people with harmful intentions.

4. Scientific and Technical Challenges

- Non-Definitive Features: There is no single feature or combination of features that definitively indicates whether a person is cisgender or transgender. Gender identity is not something that can be determined purely by looking at a person’s exterior. The complexity of gender involves biological, psychological, and social factors, none of which are fully captured by physical appearance.

- False Positives and Negatives: Even if such a system were developed, it would likely produce many false positives (cisgender women being classified as transgender) and false negatives (transgender women being classified as cisgender), which could lead to harm and unnecessary distress.

Alternative Approaches and Focus

Rather than focusing on detecting or classifying individuals based on their gender identity, it is more productive and ethically sound to focus on education and inclusivity. Here are a few alternative approaches:

- Educational Programs: Instead of building detection systems, it’s more beneficial to educate people about gender diversity and foster understanding and respect for all individuals, regardless of their gender identity or expression.

- Inclusive Policies: Work toward policies and systems that promote inclusion and respect, rather than trying to "detect" or label individuals. This approach can help reduce discrimination and improve social cohesion.

- Focus on Personal Interaction: Gender identity is a personal matter, and the best way to understand someone’s gender is to respect how they identify themselves. Rather than trying to detect or judge someone based on appearance, focus on interacting with individuals as they wish to be seen and understood.

Conclusion

Detecting someone's gender identity based purely on physical appearance is not only scientifically and technologically challenging but also fraught with ethical issues. It perpetuates stereotypes, infringes on privacy, and risks harm to transgender and non-binary individuals. The better approach is to focus on education, respect, and inclusivity, allowing everyone to live authentically without fear of being "detected" or misjudged based on their exterior appearance.
